The economics to save Tiwai may stack up. From what I have gathered from the news (not always accurate), there will be a direct loss of 1,000 jobs and a further loss of 1,600 associated jobs, plus the cost of connecting Manapouri to the national grid is put at $400m. At a guess I would say that the cost of the unemployment benefits and their administration would be north of $50m a year, and add to that the clean-up cost to the company of $200m. A negotiated settlement should be achieved; I would think the current statements by both sides are hard-line statements before any negotiations start behind closed doors.

I would tend to agree that Tiwai is less tenable than the other two. That said, it is something I wish could be retained rather than just shut down. Only three of the top 10 major aluminium companies are not Chinese or Russian, which presents a significant risk if trade relations collapse and embargoes eventuate.

NZDF General discussion thread

- Thread starter NZLAV


Takao

The Bunker Group

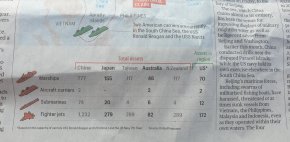

Bugger, somebody's leaked our secrets. And our four SSNs as well. The bastards. At least they didn't find out about the CVs.

I think it's a little out of date - but it looks like the real reason for the Australian FSP has been leaked.

I knew it wouldn't stop at the All Blacks....

(Source: )

> Bugger, somebody's leaked our secrets. And our four SSNs as well. The bastards. At least they didn't find out about the CVs.

Sneaky, sneaky little buggers! You have been holding out on us!!

Nighthawk.NZ

Well-Known Member

> Bugger, somebody's leaked our secrets. And our four SSNs as well. The bastards. At least they didn't find out about the CVs.

Finally, we seem to have the defence force numbers that Aussie can't complain about... lol

Redlands18

Well-Known Member

> Finally, we seem to have the defence force numbers that Aussie can't complain about... lol

OK, I can understand why you want to keep it secret that NZ is a superpower, but the one I can't work out is why NZ is matching Taiwan ship for ship and jet for jet.

Nighthawk.NZ

Well-Known Member

> OK, I can understand why you want to keep it secret that NZ is a superpower, but the one I can't work out is why NZ is matching Taiwan ship for ship and jet for jet.

You do realise it is the other way around... that Taiwan is matching us... lol

Redlands18

Well-Known Member

> You do realise it is the other way around... that Taiwan is matching us... lol

Sorry, but I have to let you know that fantasy posts are not allowed on DT.

Nighthawk.NZ

Well-Known Member

> Sorry, but I have to let you know that fantasy posts are not allowed on DT.

But the newspaper article is saying that it is true... we believe everything that goes to print because our journalists are top-notch... lol ;-)


Nighthawk.NZ

Well-Known Member

New Zealand Defence Force websites appear to be down

None of the public defence force websites were working this afternoon...

www.stuff.co.nz

I wonder if they were hacked from China because we suspended our extradition treaty with Hong Kong... (not saying it was... just the timing of them going down, lol). Someone forgot to pay the web host, most likely, lol.


> I wonder if they were hacked from China because we suspended our extradition treaty with Hong Kong... (not saying it was... just the timing of them going down, lol). Someone forgot to pay the web host, most likely, lol.

If that's the case, I'd have thought they'd have taken the MoD site down as well, but I got through there OK earlier tonight and still can.

Nighthawk.NZ

Well-Known Member

> If that's the case, I'd have thought they'd have taken the MoD site down as well, but I got through there OK earlier tonight and still can.

It's most likely that someone forgot to pay the bill or put the cables in backwards after an upgrade... like I said, it was just the timing of it...

Although, having said that, it wouldn't surprise me one bit if it was...

Gracie1234

Well-Known Member

I would not be surprised if an external party was involved; that, or just poor change management. But considering how long they were down, as an IT guy I find it a concern.

Could they be overloaded with all the added interest post-COVID? That would be a good thing: defence can finally fill all those vacant paralines, and the govt may actually have to put its money where its mouth is and fulfil the army expansion plans it has touted.

A good time for all services, including police, to get their numbers back up to strength, and no doubt they could even afford to be more selective instead of merely putting bums in seats in certain cases. Best outcome from a worst-case scenario.


> I would not be surprised if an external party was involved; that, or just poor change management. But considering how long they were down, as an IT guy I find it a concern.

Well, still down: "...Due to a technical issue at one of our data centres, visitors to nzdf.mil.nz, navy.mil.nz, army.mil.nz and airforce.mil.nz are currently being redirected to this website..." (Defence careers site).

As an IT support man myself, I'd have to say a multi-day outage at a datacentre most likely means one of two things: (1) the datacentre is no more than a 10-year-old PC in a non-air-conditioned back room and the system admin is a 7-year-old... or (2) something has happened that they'd rather not make public knowledge and is being very closely monitored at senior levels!

This is so far removed from what you'd normally expect of an IT outage, especially if it involves a data centre. Either option is rather concerning, but for very different reasons.

Gracie1234

Well-Known Member

Considering the ease and relatively low cost of cyber warfare, I would consider this an area that should be invested in more heavily. Australia has announced $1.5B, which is a lot. I have to admit I am not aware of NZ's capabilities; we have increased our investment in the NZDF, but that seemed to go into hardening our networks against attack rather than into offensive capability. I would not be surprised if the country's offensive capability resides within the GCSB, but I do not know.

Nighthawk.NZ

Well-Known Member

> Considering the ease and relatively low cost of cyber warfare, I would consider this an area that should be invested in more heavily. Australia has announced $1.5B, which is a lot. I have to admit I am not aware of NZ's capabilities; we have increased our investment in the NZDF, but that seemed to go into hardening our networks against attack rather than into offensive capability. I would not be surprised if the country's offensive capability resides within the GCSB, but I do not know.

Pretty sure there was an increase in spending on cyber warfare in the DCP 2019, and there was an increase a few years back as well...

> As an IT support man myself, I'd have to say a multi-day outage at a datacentre most likely means one of two things: (1) the datacentre is no more than a 10-year-old PC in a non-air-conditioned back room and the system admin is a 7-year-old... or (2) something has happened that they'd rather not make public knowledge and is being very closely monitored at senior levels!
>
> This is so far removed from what you'd normally expect of an IT outage, especially if it involves a data centre. Either option is rather concerning, but for very different reasons.

You were so close: according to Reddit, it was hosted on a 12-year-old SAN, the disk array died, and they don't have appropriate backups.

I assume the details are in this paywalled article, but I don't have a subscription; the blurb you can see says the sites are deemed unrecoverable.

Online casualties: NZ Defence Force websites now deemed unrecoverable

> You were so close: according to Reddit, it was hosted on a 12-year-old SAN, the disk array died, and they don't have appropriate backups.
>
> I assume the details are in this paywalled article, but I don't have a subscription; the blurb you can see says the sites are deemed unrecoverable.
>
> Online casualties: NZ Defence Force websites now deemed unrecoverable

OMG, if there's one thing most important in life for a SysAdmin, it's ensuring you back up, back up the backups, and shift them quickly to secure off-site storage. 'Secure' to a SysAdmin, of course, implies not only protection against theft but also protection against anything that affects access to the building and/or IT systems, such as fire, earthquake (esp. in NZ), man-made acts, etc. By doing so you safeguard the option of being able to set up shop somewhere else and recover your data.

They've done themselves untold reputational damage in my book alone. What is more concerning is what other critical data they've lost... it's almost a given it won't just be websites hosted on that disk array!

I digress, but here's a story! Many years ago I was working in a hosted datacentre (a major name in IT in those days, somewhat less so now, funnily enough!) and was helping the boss put a panel back on the front of a one-week-old UPS (literally just after the floor tiles had been cut to size, the unit having been commissioned 2-3 days before).

I had my end seated when he went to slot his end back in and somehow managed to pull my end out and across a 400V busbar, which showered me in molten copper from a metre away. BANG!... datacentre completely dead except for the sound of alarms going off everywhere... under emergency lighting only! I had instinctively jumped back with a hell of a fright but was fine. I then saw the boss frozen to the spot holding the panel and immediately thought he was 'live', so I grabbed a piece of packing-case timber to knock him away from the source... I'm about to whack him with the wood when he looks at me and just says calmly, 'Bugger, that'll take some explaining', and stands up... he was fine! (He wouldn't have been 5 seconds later, after I had whacked him!)

Next we had engineers crashing their way into the datacentre and people going in all directions, and then the phones started... customers wondering why their IT systems had gone offline! Wow, that was a fun day at work... we were up and running at 80% within 90 minutes. Learnt a lot that day!

The mobile phone network operator I used to work for had its datacentre backed up to a separate site in the same town in England when I joined it. It built a new & much bigger one in Ireland a few years later.

I recall a power cut while I was working there. Lights & screens flickered, then went back to normal. Other buildings nearby (e.g. the nearest pub) took a while to get electricity back.
